This post is also available in Dutch.

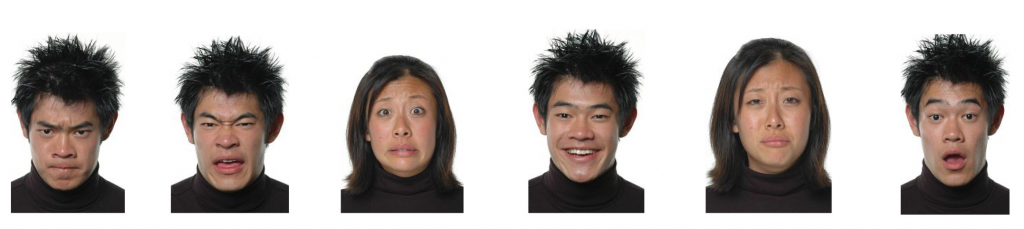

These days, software can do many things that humans do, such as recognizing facial expressions of emotion. Computers are trained to recognize facial emotion by processing millions of example images, each paired with an emotion label (such as happiness, anger, or disgust). What is surprising, or at least underappreciated, is that computers are not very good at recognizing naturalistic facial expressions: the ones that occur outside the lab, in everyday life. In fact, both humans and computers perform rather poorly on facial expressions that are naturalistic rather than deliberately expressed (for the latter, see the image below).

Beginning in the 1970s, American psychologist Paul Ekman did much to popularize the idea that humans universally recognize facial expressions. Ekman and other researchers conducted experiments in which people were shown extreme instances of facial expressions, for instance, a scowling face to indicate anger and a frowning face to indicate sadness. Their results suggested that humans recognize six facial expressions (anger, disgust, fear, happiness, sadness, and surprise) and do so with high accuracy, between 70% and 90%. However, work on facial emotion over the past ten to fifteen years has shown that when people are shown spontaneous facial expressions, their recognition accuracy drops to between 22% and 63%. This is true not only for humans but also for computers.

A recent study tested how well three automatic facial expression recognition (FER) systems recognize deliberately expressed facial expressions compared with more spontaneous (i.e., more natural) ones. The researchers used two kinds of image datasets: (1) actors displaying deliberate facial expressions in a lab setting and (2) facial expressions drawn from movies. For the spontaneous expressions in the movie images, average recognition accuracy was 40%. For the deliberately expressed ones, by comparison, it was 80%. Notably, the study also found that the FER systems misclassified many facial expressions as neutral and that recognition accuracy varied widely across emotion categories: it was highest for happiness and lowest for fear. This variability could be due to how often these emotions occur in real life; expressions of happiness are more common than expressions of fear.

Such findings paint a complicated picture of how emotions can be read from the face. It is easy to think that computers can be good at a particular task, or even surpass humans at it. However, one should look at the context and ask whether the task is even representative of what happens in real life. Most facial expressions are not scowls and frowns but subtle, fleeting ones. Additionally, many facial expressions do not fall into clear-cut categories but are blends of different categories. It is tempting to believe that software can readily recognize facial expressions of emotion, a claim made by some tech companies developing such software (e.g., Microsoft, Amazon, Affectiva). Yet one should always take a minute to stop and ask which facial expressions we are talking about.

Credits

Author: Julija Vaitonyte

Buddy: Judith Scholing

Editor: Christienne Damatac

Translation: Wessel Hieselaar

Editor translation: Felix Klaassen

Image by Gerd Altmann via Pixabay