This post is also available in Dutch.

With the development of more and more ‘artificial intelligence’ (AI), we are growing ever more accustomed to offloading our thinking. We have become used to algorithms searching the web for relevant content, providing personalized product recommendations, and replacing human border control with automated face recognition. The more we outsource to algorithms, the less we need to think, perceive, and act ourselves. The algorithms are doing it for us.

The same increasingly holds in science. It used to be pocket calculators or SPSS. But nowadays, scientists routinely use sophisticated, so-called machine learning (ML) algorithms to analyze, organize, and interpret data. Complicated computations that we cannot possibly think through ourselves can be performed by computers in mere seconds. It has become easier to train an ML algorithm than to reason about whether or not one should.

Prejudiced algorithm

This was painfully illustrated by a paper recently published in Nature Communications. Unthinkingly, the authors created an algorithm that caused outrage on social media. The authors seemed surprised. How could people be so upset? After all, the researchers had merely trained an algorithm to perceive “trustworthiness” in faces just as humans do, or so the researchers thought. They hadn’t realized that they were (unintentionally) reviving the pseudoscience of “physiognomy”.

Physiognomy is the outdated idea that personal characteristics (e.g. trustworthiness) can be ‘read off’ a person’s face. In the past, physiognomy has contributed to “scientific” racism and anti-semitism, with disastrous consequences. No scientist wants to accidentally create a modern variant of physiognomy. Yet that is what happened here. How is this possible?

Things went wrong in the translation of knowledge from psychology to AI. Psychological research has shown that people form “trustworthiness” impressions that depend on facial features, some of which are under a person’s control (e.g., smiling) but many of which are not (e.g., distance between the eyes, width of the nose, resting position of the mouth). With the goal of analyzing facial expressions in portraits, the authors of the Nature Communications paper reasoned: if we create an algorithm that mimics human “trustworthiness” impressions, then that algorithm can detect how “trustworthy” someone wants to appear. However, the algorithm, like humans, forms stereotyped impressions based on characteristics that are not under a person’s control. It is physiognomy in a new, automated guise.

Showcases of the algorithm have appeared in the popular media, including “trustworthiness” scans of photos of celebrities. The hope now is that the researchers will counteract the consequences. The algorithm could otherwise become a dangerous addition to the growing list of discriminatory algorithms already used in, for instance, surveillance, border control, and job selection. The editorial board of Nature Communications has started an investigation, and the academic community is awaiting its formal statement on the matter.

Telltale of poor thinking

The case study shows a variety of poor judgments, including insufficient historical awareness and unethical dissemination. But let us focus on one clear telltale of poor thinking here: at its core, the research shows one of the most basic errors in logic.

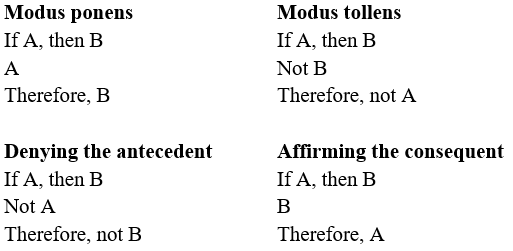

Logic is the study of good reasoning. In any introductory logic class, one learns two basic valid reasoning schemes, modus ponens and modus tollens, and two classic reasoning fallacies, denying the antecedent and affirming the consequent. The reasoning in the Nature Communications study was an instance of the fallacy of affirming the consequent: “If a person wants to appear trustworthy, then they will display more ‘trustworthiness’ in their face (e.g., by smiling). Therefore, if a person displays more ‘trustworthiness’ in their face (e.g., by having a mouth that looks like a ‘smile’ in neutral position), then the person wants to appear more trustworthy.” That this reasoning is invalid should be evident to anyone giving it some thought, and especially to any researcher using AI.
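For readers who like to check such things mechanically, here is a minimal sketch in Python that tests the four schemes by brute force over a truth table. It is only an illustration and not part of the study discussed here. The names `implies`, `find_counterexample`, and `FORMS` are made up for this example. Read P as “the person wants to appear trustworthy” and Q as “the face displays ‘trustworthiness’”.

```python
from itertools import product

def implies(p, q):
    """Material implication: 'if p then q' is false only when p is true and q is false."""
    return (not p) or q

def find_counterexample(premises, conclusion):
    """Return a (P, Q) assignment with all premises true and the conclusion false, or None."""
    for p, q in product([True, False], repeat=2):
        if all(premise(p, q) for premise in premises) and not conclusion(p, q):
            return (p, q)
    return None

# Each scheme: (list of premises, conclusion), all written as functions of P and Q.
FORMS = {
    "modus ponens":             ([implies, lambda p, q: p],     lambda p, q: q),
    "modus tollens":            ([implies, lambda p, q: not q], lambda p, q: not p),
    "affirming the consequent": ([implies, lambda p, q: q],     lambda p, q: p),
    "denying the antecedent":   ([implies, lambda p, q: not p], lambda p, q: not q),
}

# A scheme is valid exactly when no counterexample exists.
counterexamples = {name: find_counterexample(prem, concl)
                   for name, (prem, concl) in FORMS.items()}

for name, example in counterexamples.items():
    verdict = "valid" if example is None else f"invalid, counterexample (P, Q) = {example}"
    print(f"{name}: {verdict}")
```

The counterexample to affirming the consequent is P false and Q true: a person who is not trying to appear trustworthy at all, but whose mouth happens to look like a smile at rest.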

With fancy algorithms at one’s disposal, one may be at greater risk of not thinking things through. At the same time, misconceived algorithms can do more damage, more efficiently and at a larger societal scale. Think about it. Don’t let the AI do the thinking for you.

Iris van Rooij

Distinguished Lorentz Fellow at NIAS-KNAW

Computational Cognitive Science, Donders Centre for Cognition

Author note:

This Donders Wonders blog is an adaptation and extension of a letter ‘Pas op met mengen psychologie in kunstmatige intelligentie’ by Iris van Rooij published in the NRC 5-10-2020.

Further reading

– O’Neil, C. (2016). Weapons of Math Destruction: How Big Data Increases Inequality and Threatens Democracy. Penguin Books Ltd.

– Agüera y Arcas, B., Mitchell, M., and Todorov, A. (2017). Physiognomy’s New Clothes https://medium.com/@blaisea/physiognomys-new-clothes-f2d4b59fdd6a

Comment:

At the moment of writing this blog, an open letter is being circulated: “Open letter from scientists to the Dutch government on autonomous weapons”

Credit

Original language: English

Buddy: Felix Klaassen

Editor: Marisha Manahova

Translation: Ellen Lommerse

Editor translation: Wessel Hieselaar