This post is also available in Dutch.

You’ve probably heard of “auditory” and “visual” brain areas, so how does your brain integrate both senses when you see someone speak? Our evidence suggests the brain does not compartmentalize.

Image courtesy of Pixabay (CC0), modified by Cristiano Micheli.

Have you ever asked yourself why it is so much easier to get hooked watching YouTube videos than reading a book? In a recently published study, we tried to understand why this is by hooking up electrodes to people’s brains to see how the brain integrates two speech modalities: audio and video.

The experiment

In the study, we looked at which areas of the brain become active when people see others speaking. Scientific experiments often use artificial audio stimuli that are very unlike day-to-day spoken interactions, even though we know that we use not only our ears but also our eyes to understand speech. Just think of a party or a noisy urban street where we have to make sense of what a friend is saying: the mouth and other visual cues help fill in the gaps created by the noisy environment.

So, how does the brain fuse these two streams (auditory and visual) into one so that we end up perceiving just one thing, that is, a speaking person? We tried to answer this question by recording activity directly from the brain as participants watched a video of someone talking. (Don’t worry: we didn’t cut them up for the study. They were hospital patients, and the electrodes had been implanted to monitor seizures.)

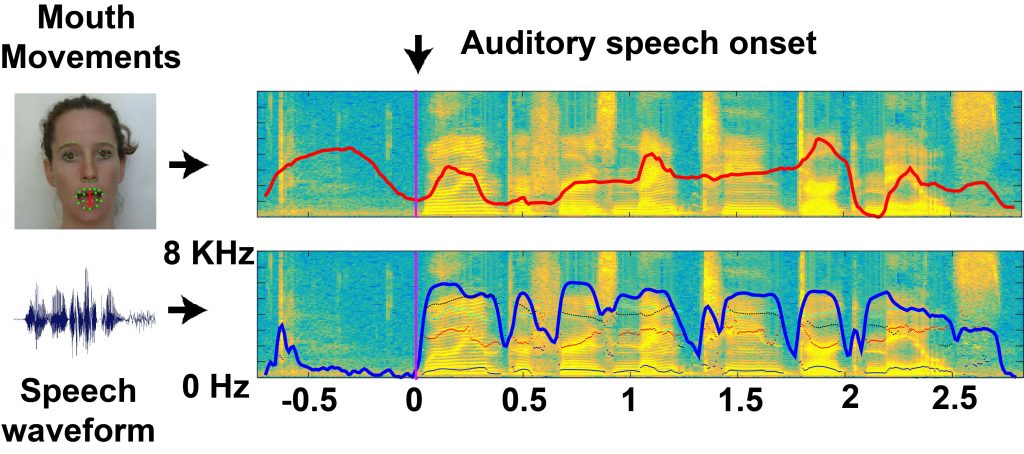

Mouth movements (red) and speech waveforms (blue) are correlated with brain activity to localise the sites of multisensory integration.

Image by Cristiano Micheli.
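For the curious, the idea in the figure can be mimicked with a toy example. This is only a minimal sketch built on made-up data, not the analysis from our paper: the signals `mouth` and `audio`, the three simulated electrodes, and the helper `pearson_r` are all hypothetical names invented for illustration. It simply asks, for each electrode, how strongly its activity goes up and down together with each stimulus feature.

```python
import numpy as np


def pearson_r(x, y):
    """Pearson correlation between two equally long 1-D signals."""
    x = np.asarray(x, dtype=float) - np.mean(x)
    y = np.asarray(y, dtype=float) - np.mean(y)
    return float(np.dot(x, y) / np.sqrt(np.dot(x, x) * np.dot(y, y)))


rng = np.random.default_rng(0)  # fixed seed so the toy data is reproducible
n_samples = 5000

# Hypothetical stimulus features (white-noise stand-ins, not real recordings).
mouth = rng.standard_normal(n_samples)  # e.g. how far the mouth is open
audio = rng.standard_normal(n_samples)  # e.g. how loud the speech is


def noise():
    """Background activity unrelated to either stimulus feature."""
    return 0.5 * rng.standard_normal(n_samples)


# Three simulated electrodes (assumed for illustration only).
electrodes = {
    "follows_audio": audio + noise(),
    "follows_mouth": mouth + noise(),
    "follows_both": audio + mouth + noise(),
}

# Correlate every electrode with every stimulus feature.
scores = {
    name: {"audio": pearson_r(signal, audio), "mouth": pearson_r(signal, mouth)}
    for name, signal in electrodes.items()
}

for name, r in scores.items():
    print(f"{name}: r_audio={r['audio']:.2f}, r_mouth={r['mouth']:.2f}")
```

In this toy picture, an electrode whose activity correlates with both the mouth movements and the sound would be a candidate site where the two streams meet. Real recordings are far messier, and a real analysis needs more care than a plain correlation.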

According to our study, it seems that there is a hub in the brain where information about sound and images comes together. This hub is in the posterior part of the temporal lobe, precisely at the intersection of visual and auditory brain areas. However, things weren’t exactly as we expected: we found that visual stimuli were also represented in temporal areas and audio stimuli in visual areas!

Multifunctional brain areas

Our results question the once-common belief that each sense is processed in a different part of the brain, and instead support multisensory processing. This also makes sense, right? Why would the brain be divided into compartments? It is simply more efficient for our ‘hardware’ to redirect and duplicate audio and visual streams in many areas of the brain, even if it’s redundant. It’s kind of like making several copies of the same file so you can do something different with each one.

So, next time you’re feeling guilty for binge-watching something, take consolation in the fact that the brain evolved to track noisy objects and not to read books. That being said: it wouldn’t hurt you to read every once in a while. You can practice by picking up our paper!

This blog was written by Cristiano Micheli and edited by Mónica Wagner.