This post is also available in Dutch.

Our brain works like a statistician: based on experience and learning, it predicts what we are about to perceive. When different scenarios are equally likely, for example when we view an ambiguous scene, groups of neurons start to compete. Each group favours one interpretation of the scene, but only one can win, and the winner determines what we see.

Perceptual rivalry

To study the mechanisms underlying this competition (also called perceptual rivalry), the traditional approach is to use an image or video that can be interpreted in two different ways. Perception then switches spontaneously between the two interpretations every 5 to 10 seconds.

In theory, it works like this: the observer gets used to the current interpretation, attention shifts towards the other one (new is always more stimulating!), and what we perceive changes.
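The "getting used to it" account can be illustrated with a toy simulation. This is a hypothetical sketch, not a model from the research described in this post: the parameters `build_up`, `decay` and `margin` are made-up values, and a time step has no fixed duration. Each interpretation accumulates adaptation while it is perceived and recovers while it is suppressed; once the current percept is sufficiently "worn out", the fresher interpretation takes over.

```python
def simulate_rivalry(steps=200, build_up=0.05, decay=0.05, margin=0.3):
    """Toy adaptation model of perceptual rivalry (illustrative only).

    Each of the two interpretations (0 and 1) carries an adaptation level
    between 0 and 1. While an interpretation is perceived, its adaptation
    builds up towards 1; while it is suppressed, its adaptation decays
    towards 0. Perception switches once the dominant interpretation is more
    adapted than the suppressed one by more than `margin`.
    All parameter values are hypothetical.
    """
    adaptation = [0.0, 0.0]
    dominant = 0
    percepts = []
    for _ in range(steps):
        suppressed = 1 - dominant
        # The perceived interpretation gets "used to" (adapts)...
        adaptation[dominant] += build_up * (1.0 - adaptation[dominant])
        # ...while the suppressed one recovers.
        adaptation[suppressed] -= decay * adaptation[suppressed]
        if adaptation[dominant] - adaptation[suppressed] > margin:
            dominant = suppressed  # the fresher interpretation takes over
        percepts.append(dominant)
    return percepts


percepts = simulate_rivalry()
switches = sum(1 for prev, cur in zip(percepts, percepts[1:]) if prev != cur)
print(f"{switches} perceptual switches in {len(percepts)} time steps")
```

Because this sketch is deterministic, its switches come at regular intervals; real perceptual switches are far less regular.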

Let’s have a look at a more concrete example of perceptual rivalry: motion rivalry.

Motion A + Motion B = Motion C?

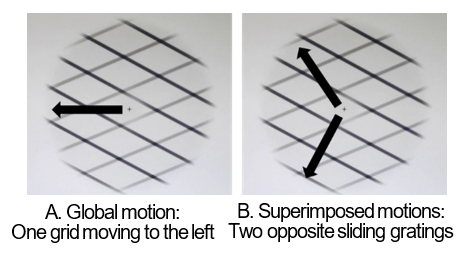

I am going to show you a video that is interpreted 50% of the time as one global motion (fig. 1.A) and 50% of the time as two superimposed motions (fig. 1.B). If you focus your attention on the cross in the center of the video, your perception of motion should spontaneously switch between the two interpretations. Ready? Click here.

What happens in the brain?

Classically, motion perception is a cognitive function processed along a hierarchy running from so-called low-level areas to high-level areas of the visual cortex. Previous research suggests that these areas respond to different types of motion: while low-level areas encode only simple, local motion information, higher areas are thought to combine these pieces of information into global motion and thereby detect more complex motion.

A: low-level processing; B: high-level processing

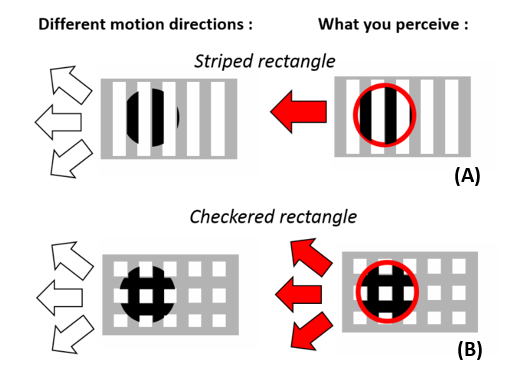

For example, imagine a rectangular striped object moving horizontally (fig. 2.A). If I ask you to describe its direction of motion, you need more than one landmark (e.g., a grid) to tell apart the different ways the object could be moving (fig. 2.B). This illustrates how our perception depends on what visual information is analysed, and how.
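The need for a second landmark resembles what vision scientists call the aperture problem: a single set of stripes only reveals the part of the motion perpendicular to the stripes. One classic textbook rule for combining two such signals into one global motion is the "intersection of constraints". The sketch below illustrates that rule under the assumption that the stimulus consists of two straight stripe patterns; it is not the analysis used in the research described here, and the function name and vector representation are hypothetical.

```python
import math


def intersection_of_constraints(n1, s1, n2, s2):
    """Combine two 1-D motion signals into a single 2-D velocity.

    A striped pattern only reveals motion along its unit normal n (the
    direction perpendicular to its stripes), with speed s along that normal.
    This constrains the true velocity v to satisfy v . n == s. With two
    non-parallel patterns, the two constraint lines intersect in one point.
    """
    (a, b), (c, d) = n1, n2
    det = a * d - b * c
    if abs(det) < 1e-12:
        # One orientation only: many velocities fit, so direction is ambiguous.
        raise ValueError("parallel stripes: the motion direction stays ambiguous")
    # Solve [[a, b], [c, d]] @ [vx, vy] == [s1, s2] with Cramer's rule.
    vx = (s1 * d - b * s2) / det
    vy = (a * s2 - c * s1) / det
    return vx, vy


# Two stripe patterns tilted at +45 and -45 degrees, each seen drifting
# along its own normal at speed 1/sqrt(2).
r = 1 / math.sqrt(2)
vx, vy = intersection_of_constraints((r, r), r, (r, -r), r)
# The combined (global) motion is approximately (1, 0): purely rightward.
```

With only one orientation the function refuses to answer, which mirrors the point above: a single set of stripes is not enough to pin down the direction of motion.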

Looking at the neural mechanisms behind this, there is evidence that low-level and high-level areas of the visual cortex interact through both low-to-high (feedforward) and high-to-low (feedback) connections.

Predicting a pizza

According to predictive coding theory, the role of feedback has been underestimated, and it is crucial to how we perceive, learn about and memorise our environment. Based on previous experiences, our brain is constantly predicting what is likely to happen. These predictions can be more or less accurate, and feedback connections help us to adjust them.

Let’s say that yesterday I had pizza for lunch at the canteen. Based on this experience, my representation of pizza is bread dough topped with tomato, mozzarella and oregano. If today my friend offers me pizza with cream, ham and mushroom instead, my prior expectation is not confirmed. My brain then has to update its prior representation to account for this new kind of pizza.

This is the core idea of predictive coding theory: first, the brain predicts what you are going to perceive. What you actually perceive can then be consistent with that prediction (the prediction is confirmed) or inconsistent with it (the prediction is wrong). In the latter case, a prediction-error signal is generated to update your initial interpretation of the scene.
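The predict, compare and update loop can be illustrated with the pizza example. This is a minimal delta-rule sketch, not a claim about how neurons actually implement predictive coding; the feature list, the 0/1 encoding and the `learning_rate` value are hypothetical choices for illustration.

```python
# Hypothetical features describing a pizza (1 = topping present, 0 = absent).
FEATURES = ["tomato", "mozzarella", "oregano", "cream", "ham", "mushroom"]


def predictive_update(prediction, observation, learning_rate=0.5):
    """One step of a toy predictive-coding loop.

    Compares the prediction with what is actually observed, computes a
    prediction error per feature, and nudges the prediction towards the
    observation in proportion to that error (a simple delta rule).
    """
    errors = [obs - pred for pred, obs in zip(prediction, observation)]
    updated = [pred + learning_rate * err for pred, err in zip(prediction, errors)]
    return errors, updated


# Yesterday's pizza sets the prior expectation.
prior = [1, 1, 1, 0, 0, 0]
# Today's pizza has cream, ham and mushroom instead.
today = [0, 0, 0, 1, 1, 1]

errors, posterior = predictive_update(prior, today)
print(errors)     # [-1, -1, -1, 1, 1, 1]
print(posterior)  # [0.5, 0.5, 0.5, 0.5, 0.5, 0.5]
```

If today's pizza matched yesterday's, every error would be zero and the prediction would stay unchanged; only a mismatch drives an update.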

What are the perspectives?

We have seen that feedback connections (from high-level to low-level areas) considerably change how we perceive things. The function of feedback has also been studied in other fields, such as learning, memory and attention.

Finding out more about the function of feedback connections between brain areas will have direct clinical applications, for example in understanding cognitive disorders such as autism or schizophrenia.

Original language: English

Author: Kim Beneyton

Buddy: Eva Klimars

Editor: Monica Wagner

Translator: Wessel Hieselaar

Editor Translation: Jill Naaijen

1 thought on “How to puzzle perception? A debate inside your brain”