This post is also available in Dutch.

BCI applications have often been falsely mystified as ‘thought reading’ machines by the press. Here I try to counter these false expectations by showing how BCI applications (don’t) deliver on that.

The pledge: expectations about BCI control

It is sometimes assumed that a brain-computer interface (BCI), a technique to record and decode brain waves, can also read a person's thoughts once they are hooked up to, for example, an electroencephalograph. Here I want to give my two cents on what a BCI can and can’t do, to help debunk this rather common misconception. I chose the example of how the brain controls the fingers of the hand because the brain signal related to finger movement is strong and can be easily measured with non-invasive devices. Plus, I ran an experiment on this topic myself during my doctoral studies. Here is what I gathered.

Scientists have long been trying to harness cerebral waves with the intention to guide computers or robotic arms, but how does the brain drive our own hands?

We need our hands for nearly everything during the day, from showering in the morning and holding a cup of coffee to switching off our bedroom light at night, from playing hardball to doing magic tricks. Interestingly, the brain has perfect control of our fingers, but will a human-made external interface ever learn to steer a robotic hand? Can we play piano without our hands just by hooking ourselves up to a BCI device? For example, compare the BCI expectations of control with what Artificial Intelligence can already achieve in machine dexterity here.

Image courtesy of Mio (license)

BCI can hardly control an external interface directly. Surprise!

The expectation of the general public, encouraged by fluffy newspaper headlines, is that we can control external interfaces with our thoughts and that one day technology will allow us to capture every intention of movement, for instance to move a robotic hand. Well, today that technology is called electroencephalography (EEG), and with it we can record, from the surface of the scalp, tiny electric currents coming from inside the brain.

But can we see everything that is going on in the brain with non-invasive recordings? Not quite, because some of the deep brain sources may not be recordable even by the most modern BCI devices (normally they use an EEG cap fitted on the head of a person).

Let me now explain the relation between finger movements and neuronal brain activity. The simplest and most direct form of brain-machine interface is the motor-controlled BCI, and one type of it records the intention to move the fingers. Each finger movement depends on its neighbouring fingers (e.g., try to move your pinky and middle finger separately. Difficult, right?). This is because a single finger is controlled by neurons that are active in multiple locations.

Evidence from a study I conducted on visual-motor control of fingers shows dependencies in the brain between far-apart groups of neurons. They fire with similar time patterns to control one finger and, when more fingers move, they coordinate their dance in a whole-brain network. So, if we want to see a network of neuronal sources inside the head, we cannot simply read it off an external EEG cap. In fact, the activities of the brain sources get all mixed up at the scalp, so it is difficult to say whether a surface wave came from faraway neurons or from the ones right below the electrode.
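To make the mixing problem concrete, here is a minimal toy sketch in Python. It assumes the scalp signal is a simple weighted sum of two sources. The source frequencies, the mixing weights and the function name `mix_sources` are all made up for illustration and are not taken from my study.

```python
import numpy as np


def mix_sources(sources, mixing):
    """Return electrode signals as a weighted sum of the source signals.

    sources: array of shape (n_sources, n_samples)
    mixing:  array of shape (n_electrodes, n_sources), hypothetical weights
    """
    return mixing @ sources


# One second of data at 500 samples per second (illustrative values).
t = np.linspace(0.0, 1.0, 500, endpoint=False)
near = np.sin(2 * np.pi * 10 * t)  # hypothetical source right below electrode 0
far = np.sin(2 * np.pi * 20 * t)   # hypothetical source far away from electrode 0
sources = np.vstack([near, far])

# Made-up mixing weights: each electrode sees both sources.
mixing = np.array([[1.0, 0.6],
                   [0.5, 1.0]])
electrodes = mix_sources(sources, mixing)

# Electrode 0 is correlated with BOTH sources, so its trace alone
# does not tell us which source produced the wave.
r_near = np.corrcoef(electrodes[0], near)[0, 1]
r_far = np.corrcoef(electrodes[0], far)[0, 1]
print(f"electrode 0 vs near source: {r_near:.2f}")
print(f"electrode 0 vs far source:  {r_far:.2f}")

# Only if the mixing weights were known (they are not, in practice)
# could the sources be recovered by inverting the mixture.
recovered = np.linalg.solve(mixing, electrodes)
```

In a real recording the mixing weights are unknown and there are far more sources than electrodes, which is why separating the sources is much harder than in this toy case.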

The prestige: the brain as a network of sources

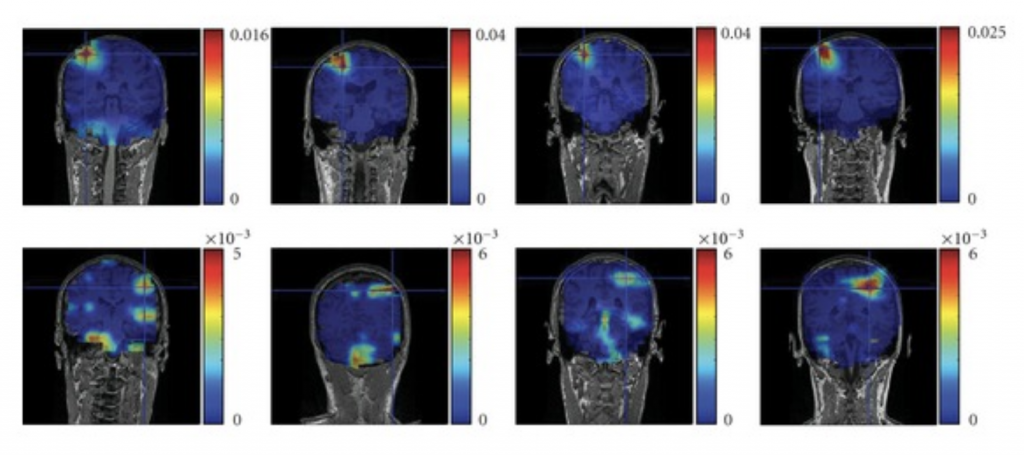

There is evidence that technology can come to the rescue of BCI. For example, we recruited volunteers who had to maintain a grip with their right hand. The goal was to measure the network of brain sources responsible for finger contraction (i.e. index finger and thumb).

To do so, we measured brain waves with a magnetoencephalography (MEG) scanner (a sort of big hair dryer that is placed over your head) and the muscular signal of the right hand with surface electrodes. The results are shown in the picture below: highly correlated sources are present throughout the entire brain of four subjects. The message is that MEG (or EEG) can record whole-brain neural activity together with, and in sync with, the finger muscles. The whole-brain access allowed by this technique may point to a new kind of BCI.

Image courtesy of Cris Micheli (license)
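The idea of checking which brain sources move in step with the hand muscle can be sketched as follows. This is a toy illustration on simulated data using plain Pearson correlation, which I picked here for simplicity. It is not the analysis pipeline of the study, and the function name `correlation_with_muscle`, the noise levels and the signal lengths are assumptions.

```python
import numpy as np


def correlation_with_muscle(source_signals, emg):
    """Pearson correlation of each source (one per row) with the muscle trace."""
    s = source_signals - source_signals.mean(axis=1, keepdims=True)
    e = emg - emg.mean()
    return (s @ e) / (np.linalg.norm(s, axis=1) * np.linalg.norm(e))


rng = np.random.default_rng(42)
n_samples = 2000

# Hypothetical common drive shared by one brain source and the muscle.
drive = rng.standard_normal(n_samples)
emg = drive + 0.5 * rng.standard_normal(n_samples)      # simulated muscle signal
coupled = drive + 0.5 * rng.standard_normal(n_samples)  # source coupled to the muscle
unrelated = rng.standard_normal(n_samples)              # source with no coupling

r = correlation_with_muscle(np.vstack([coupled, unrelated]), emg)
print("coupled source:  ", round(float(r[0]), 2))
print("unrelated source:", round(float(r[1]), 2))
```

A source that shares its drive with the muscle shows a high correlation, while an unrelated source stays close to zero. Repeating this for every reconstructed source gives a whole-brain map of the kind described above.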

Grand Finale

To wrap up our story, let us look at the pros and cons of modern BCI techniques. With a professional and costly set-up, we can measure many interesting things, like the intention to move, the intention to speak, or the brain's response to videos and songs. However, we can’t record the intention to make a complex decision. For example, we can’t measure other people's dreams, what object a person is thinking of, or the grocery list they have in mind. Even the intention of movement will not be decoded with a few electrodes and simple algorithms, because the neuronal networks involved are sometimes buried deep in the head.

Alternatively, there are devices that measure neuronal activity more directly (a new light-scattering technique pursued by Facebook, or intracranially implanted electrodes in Elon Musk’s Neuralink project). However, commercial BCI systems come with only a few electrodes, which makes the reconstruction of neuronal sources described above unlikely.

So, next time you hear about the wonders of ‘thought reading’ with simple off-the-shelf consumer electronics, be mindful of the hidden trick: look for the ace up the magician's sleeve!

Written by Cristiano Micheli, edited by Francie Manhardt and Monica Wagner, translated by Felix Klaassen and Rowena Emaus