This post is also available in Dutch.

Recently, Marvin Minsky, one of the founders of artificial intelligence, passed away. Sadly, he did not live to celebrate a breakthrough in his field: a computer program that beat a human expert at the complex Chinese board game ‘Go’. What was once a holy grail has now been achieved.

The field of artificial intelligence is advancing rapidly. This is partly due to ‘DeepMind’, a division of Google that focuses on artificial intelligence (AI). Last year, DeepMind developed an algorithm that could learn to play arcade games at a human level. Now its computer program ‘AlphaGo’ is able to beat humans at Go, a centuries-old, complex and strategic board game. The European Go champion Fan Hui lost five out of five games against the computer. AlphaGo also beat the competing algorithms from Silicon Valley, such as the one by Facebook, in almost every game.

Short movie about AlphaGo

More complex than chess

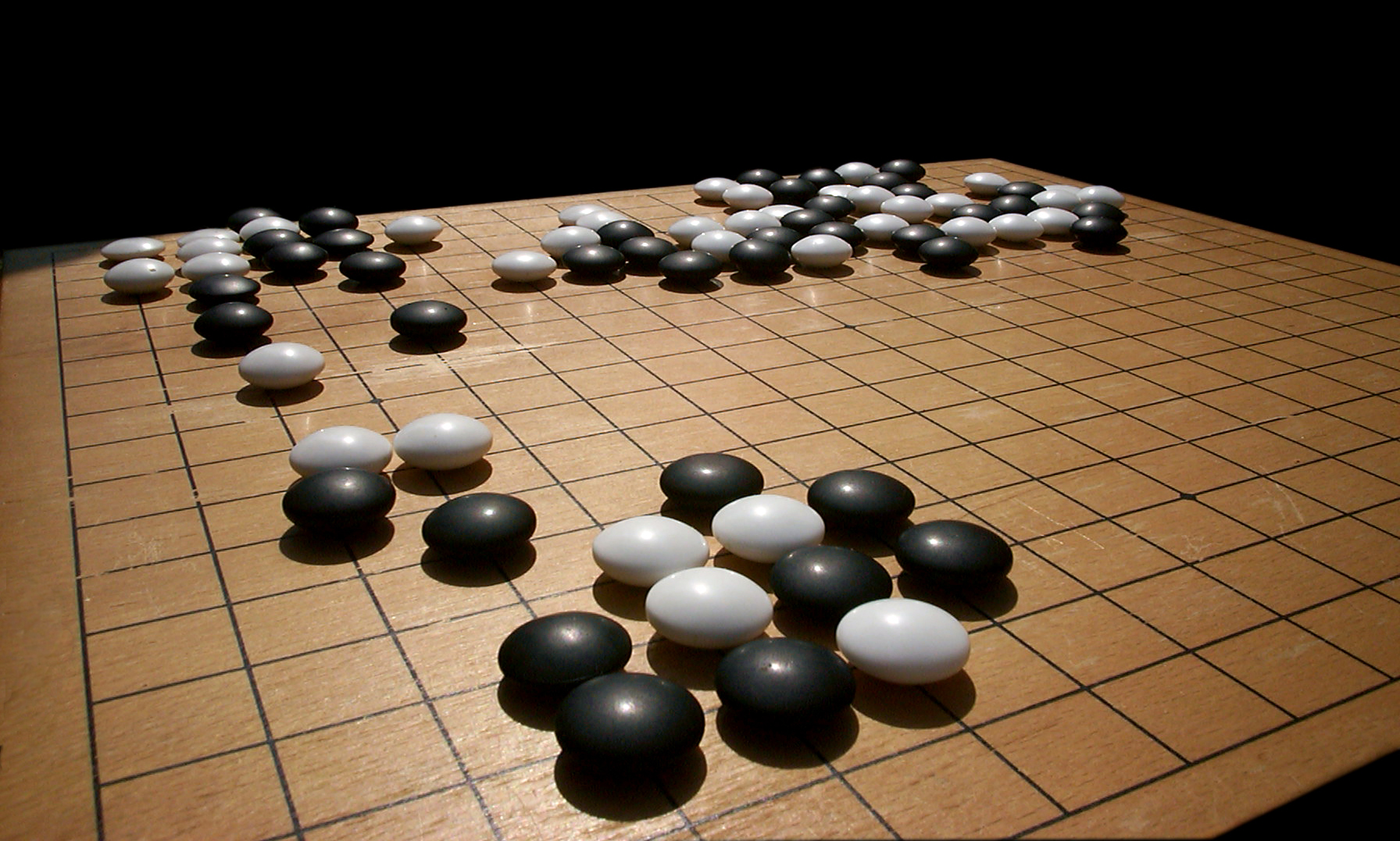

After a computer beat the chess grandmaster Garry Kasparov in 1997, the Asian game Go came to be seen as the holy grail for AI researchers because of its high degree of complexity. The idea of the game is quite simple: conquer territory by placing black and white stones on a board with a grid of 19 by 19 lines. The complexity lies in the numbers: on a typical turn a player can choose from well over a hundred different moves, and there are about 10^170 different board configurations. Algorithms that tried to use brute force and calculate all the possible options therefore failed.
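To get a feel for these numbers, here is a small back-of-the-envelope calculation. It is only a sketch: each of the 361 points on the board can be empty, black or white, which gives a rough upper bound on the number of configurations, and the speed of one billion positions per second is an assumed figure for illustration, not a measurement of any real machine.

```python
BOARD_SIZE = 19
POINTS = BOARD_SIZE * BOARD_SIZE  # stones are placed on 19 x 19 = 361 points

# Each point is empty, black or white, so 3**361 is an upper bound
# on the number of board configurations (many of them are not legal).
upper_bound = 3 ** POINTS
upper_bound_exponent = len(str(upper_bound)) - 1
print(f"points on the board: {POINTS}")
print(f"upper bound on configurations: about 10^{upper_bound_exponent}")

# Assumption for illustration: a machine that checks 10^9 positions per second.
POSITIONS_PER_SECOND = 10 ** 9
SECONDS_PER_YEAR = 60 * 60 * 24 * 365

# Years needed to visit the roughly 10^170 configurations mentioned in the text.
years = 10 ** 170 // (POSITIONS_PER_SECOND * SECONDS_PER_YEAR)
years_exponent = len(str(years)) - 1
print(f"years to visit every configuration: about 10^{years_exponent}")
```

Even under this generous assumption the search would take vastly longer than the age of the universe, which is why listing every option is not a workable strategy for Go.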

Deep neural networks

DeepMind made use of so-called ‘deep neural networks’ to recognize patterns in the game. These networks consist of several layers of neurons, with connections that are strengthened by experience and reward. Using such a network, the program AlphaGo was exposed to 30 million configurations from games played by Go experts. The program extracted information from the different board setups and used it to play against itself in a thousand games. It was rewarded for each game won. In this manner, the combination of pattern recognition and rewards made the program a Go expert.

The approach used by DeepMind is revolutionary because its networks are able to learn different tasks with relative ease. Because the mechanism underlying deep neural networks is so general, the approach can in principle be applied to other domains of AI that rely on complex pattern recognition.
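The idea of connections that are strengthened by reward can be sketched in a few lines of code. This is a toy illustration and not DeepMind's implementation: the "game" is hypothetical (three possible moves, of which move 2 always wins), the "network" is a single layer with one weight per move, and the update is a simple policy-gradient rule chosen for the example.

```python
import math
import random

random.seed(0)  # fixed seed so the run is repeatable

NUM_MOVES = 3
WINNING_MOVE = 2      # hypothetical toy game: move 2 always wins
LEARNING_RATE = 0.1   # assumed value, chosen for illustration

# One connection strength per move; all moves start out equally likely.
weights = [0.0] * NUM_MOVES


def move_probabilities(strengths):
    """Softmax: turn connection strengths into move probabilities."""
    largest = max(strengths)
    exps = [math.exp(s - largest) for s in strengths]
    total = sum(exps)
    return [e / total for e in exps]


for game in range(2000):
    probs = move_probabilities(weights)
    # Pick a move at random, favouring moves with stronger connections.
    move = random.choices(range(NUM_MOVES), weights=probs)[0]
    # Reward +1 for a win, -1 for a loss.
    reward = 1.0 if move == WINNING_MOVE else -1.0
    # Strengthen the chosen move after a win, weaken it after a loss.
    for i in range(NUM_MOVES):
        chosen = 1.0 if i == move else 0.0
        weights[i] += LEARNING_RATE * reward * (chosen - probs[i])

final_probs = move_probabilities(weights)
print([round(p, 3) for p in final_probs])
```

After training, the program plays the winning move almost every time, even though nobody told it which move was best. A real system faces a far harder problem, because a reward at the end of a long game has to be credited to hundreds of individual moves.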

Limitations

Marvin Minsky discussed the problems of neural networks in his famous 1969 book ‘Perceptrons’, written with Seymour Papert. He argued that neural networks alone were insufficient to produce human-level intelligence. Did DeepMind prove him wrong? Not yet. Even though neural networks are able to learn different tasks, they have difficulty transferring the knowledge they acquire to a different task. For each new task, the program has to start learning from scratch. As such, there remains a lot to do before AI becomes a real challenge for human intelligence.

More information

Blog about AlphaGo from Google

Reference about AlphaGo

Obituary of Marvin Minsky

This blog was written by Ruud Berkers.